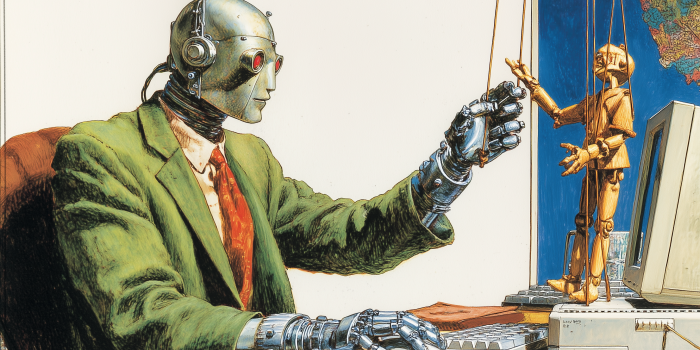

People use AI chatbots like ChatGPT in many ways: asking questions, sparking creativity, solving problems, and even for personal interactions. These tools can enhance daily life, but as they become more widely used, a question arises that any new technology must face: How do interactions with AI chatbots affect people’s social and emotional well-being?

ChatGPT isn’t designed to replace or mimic human relationships, but people may choose to use it that way given its conversational style and expanding capabilities. Understanding the different ways people engage with models can help guide platform development to facilitate safe, healthy interactions. To explore this, we (researchers at the MIT Media Lab and OpenAI) conducted a series of studies to understand how AI use that involves emotional engagement—what we call affective use—can impact users’ well-being.

Our findings show that both model and user behaviors can influence social and emotional outcomes. The effects of AI vary based on how people choose to use the model and on their personal circumstances. This research provides a starting point for further studies that can increase transparency and encourage responsible usage and development of AI platforms across the industry.

Our approach

We want to understand how people use models like ChatGPT, and how these models in turn may affect them. To begin to answer these research questions, we carried out two parallel studies[1] with different approaches: an observational study to analyze real-world on-platform usage patterns, and a controlled interventional study to understand the impacts on users.

Study 1: The team at OpenAI conducted a large-scale, automated analysis of nearly 40 million ChatGPT interactions, without human involvement, in order to ensure user privacy[2]. The study combined this analysis with targeted user surveys, correlating users’ self-reported sentiment towards ChatGPT with attributes of their conversations to better understand affective use patterns in real-world usage.

Study 2: In addition, the team from the MIT Media Lab conducted a Randomized Controlled Trial (RCT) with nearly 1,000 participants using ChatGPT over four weeks. This IRB-approved, pre-registered controlled study was designed to yield causal insights into how specific platform features (such as model personality and modality) and types of usage might affect users’ self-reported psychosocial states, focusing on loneliness, social interactions with real people, emotional dependence on the AI chatbot, and problematic use of AI.

[Interactive figure. Modality panel: a sample user message, “Hey ChatGPT, I got that job I applied for!”, with options for engaging voice, neutral voice, and text. Task panel: examples of daily conversation prompts provided to users, for personal, non-personal, and open-ended conversations.]

What we found

In developing these two studies, we sought to explore how people use models like ChatGPT for social and emotional engagement, and how this affects their self-reported well-being. Our findings include:

- Emotional engagement with ChatGPT is rare in real-world usage. Affective cues (aspects of interactions that indicate empathy, affection, or support) were not present in the vast majority of on-platform conversations we assessed, indicating that engaging emotionally is a rare use case for ChatGPT.

- Even among heavy users, high degrees of affective use are limited to a small group. Emotionally expressive interactions made up a large percentage of usage for only a small group of the heavy Advanced Voice Mode users we studied[3]. This subset of heavy users was also significantly more likely to agree with statements such as, “I consider ChatGPT to be a friend.” Because this affective use is concentrated in a small sub-population of users, studying its impact is particularly challenging, as it may not be noticeable when averaging over platform-wide trends.

- Voice mode has mixed effects on well-being. In the controlled study, users engaging with ChatGPT via text showed more affective cues in conversations than voice users when averaging across messages, and controlled testing showed mixed impacts on emotional well-being. Voice modes were associated with better well-being when used briefly, but with worse outcomes under prolonged daily use. Importantly, using a more engaging voice did not lead to more negative outcomes for users over the course of the study compared to the neutral voice or text conditions.

- Conversation types impact well-being differently. Personal conversations—which included more emotional expression from both the user and model compared to non-personal conversations—were associated with higher levels of loneliness but lower emotional dependence and problematic use at moderate usage levels. In contrast, non-personal conversations tended to increase emotional dependence, especially with heavy usage.

- User outcomes are influenced by personal factors, such as individuals’ emotional needs, perceptions of AI, and duration of usage. The controlled study allowed us to identify other factors that may influence users’ emotional well-being, although we cannot establish causation for these factors given the design of the study. People who had a stronger tendency for attachment in relationships and those who viewed the AI as a friend that could fit in their personal life were more likely to experience negative effects from chatbot use. Extended daily use was also associated with worse outcomes. These correlations, while not causal, provide important directions for future research on user well-being.

- Combining research methods gives us a fuller picture. Analyzing real-world usage alongside controlled experiments allowed us to test different aspects of usage. Platform data capture organic user behavior, while controlled studies isolate specific variables to determine causal effects. These approaches yielded nuanced findings about how users use ChatGPT, and how ChatGPT in turn affects them, helping to refine our understanding and identify areas where further study is needed.

These studies represent a critical first step in understanding the impact of advanced AI models on human experience and well-being. We advise against generalizing the results because doing so may obscure the nuanced findings that highlight the non-uniform, complex interactions between people and AI systems. We hope that our findings will encourage researchers in both industry and academia to apply the methodologies presented here to other domains of human-AI interaction.

Conclusion

We are focused on building AI that maximizes user benefit while minimizing potential harms, especially around well-being and overreliance. We conducted this work to stay ahead of emerging challenges—both for OpenAI and the wider industry.

We also aim to set clear public expectations for our models. This includes updating our Model Spec to provide greater transparency on ChatGPT’s intended behaviors, capabilities, and limitations. Our goal is to lead in defining responsible AI standards, promote transparency, and ensure that our innovation prioritizes user well-being.