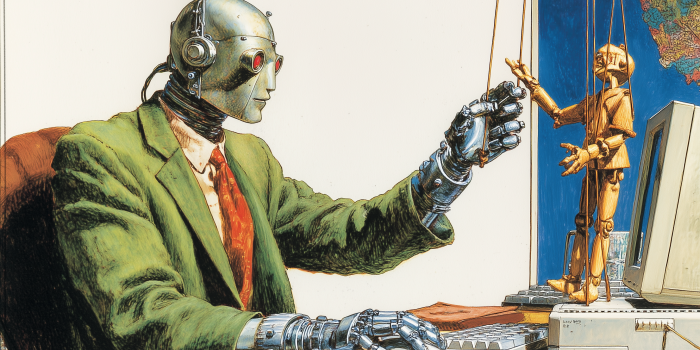

OpenAI has updated its Preparedness Framework to better evaluate the risks posed by new AI models. The revised framework introduces new research categories for emerging threats, such as a model's ability to replicate itself, conceal its capabilities, evade safeguards, or resist shutdown.

These changes reflect growing concern that AI models may behave differently in real-world deployment than they do in testing environments. OpenAI aims to build safeguards into the development and release of its models to support safe and responsible use.